DFuseNet: Deep Fusion of RGB and Sparse Depth Information

Code for our paper “DFuseNet: Deep Fusion of RGB and Sparse Depth Information for Image Guided Dense Depth Completion” is now available on GitHub.

The arXiv paper can be found here.

We also present a small dataset of calibrated RGB and LiDAR data from a short drive around Philadelphia as an additional test set. This dataset can be found here.

Abstract:

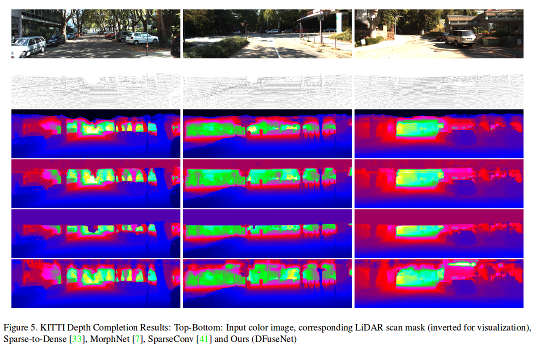

In this paper we propose a convolutional neural network that is designed to upsample a series of sparse range measurements based on the contextual cues gleaned from a high-resolution intensity image. Our approach draws inspiration from related work on super-resolution and in-painting. We propose a novel architecture that extracts contextual cues separately from the intensity image and the depth features and then fuses them later in the network. We argue that this approach effectively exploits the relationship between the two modalities and produces accurate results while respecting salient image structures. We present experimental results to demonstrate that our approach is comparable with state-of-the-art methods and generalizes well across multiple datasets.
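
The late-fusion idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the DFuseNet architecture from the paper or the released code: the helper names (`conv3x3`, `init_params`, `late_fusion_forward`), the single-layer branches, the channel width, and the random weights are all assumptions chosen only to show the data flow, in which each modality is processed by its own branch before the features are concatenated and decoded into a dense depth map.

```python
import numpy as np

def conv3x3(x, w):
    """Same-padded 3x3 convolution followed by ReLU.

    x: (C_in, H, W) feature map, w: (C_out, C_in, 3, 3) kernel.
    """
    _, h, wd = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = padded[:, dy:dy + h, dx:dx + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return np.maximum(out, 0.0)

def init_params(rng, feat=8):
    """Random, untrained weights (hypothetical sizes, for illustration only)."""
    return {
        "rgb": 0.1 * rng.standard_normal((feat, 3, 3, 3)),       # image branch
        "depth": 0.1 * rng.standard_normal((feat, 1, 3, 3)),     # depth branch
        "fuse": 0.1 * rng.standard_normal((feat, 2 * feat, 3, 3)),
        "head": 0.1 * rng.standard_normal((1, feat)),            # 1x1 projection
    }

def late_fusion_forward(rgb, sparse_depth, params):
    """rgb: (3, H, W); sparse_depth: (1, H, W) with zeros where no return exists."""
    # Each modality gets its own branch, so image context and depth
    # features are extracted independently of one another.
    f_rgb = conv3x3(rgb, params["rgb"])
    f_depth = conv3x3(sparse_depth, params["depth"])
    # Fusion happens only after the separate branches: concatenate along
    # the channel axis, then apply a joint convolution.
    fused = conv3x3(np.concatenate([f_rgb, f_depth], axis=0), params["fuse"])
    # A 1x1 projection maps the fused features to one depth channel.
    return np.einsum("oc,chw->ohw", params["head"], fused)

rng = np.random.default_rng(0)
H, W = 16, 24
rgb = rng.random((3, H, W))
full_depth = rng.uniform(1.0, 80.0, (1, H, W))
mask = rng.random((1, H, W)) < 0.05            # keep roughly 5% of the pixels
sparse_depth = np.where(mask, full_depth, 0.0)

dense = late_fusion_forward(rgb, sparse_depth, init_params(rng))
print(dense.shape)
```

With untrained weights the output values are meaningless; the sketch only shows that a sparse single-channel depth input and a full-resolution image yield a prediction at every pixel of the image grid.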